[Scraping]: Basics

scraping

09/26/2019

Web scraping lets you extract large amounts of data from websites. I'll use my GitHub page as an example, and we'll use Node.js with Puppeteer for web scraping. If you haven't already, install Node.js & Puppeteer.

1. Decide which information to extract

As an example, I'll extract the text of each item in the header navigation bar: About, Posts, SQL, Spring, Swift.

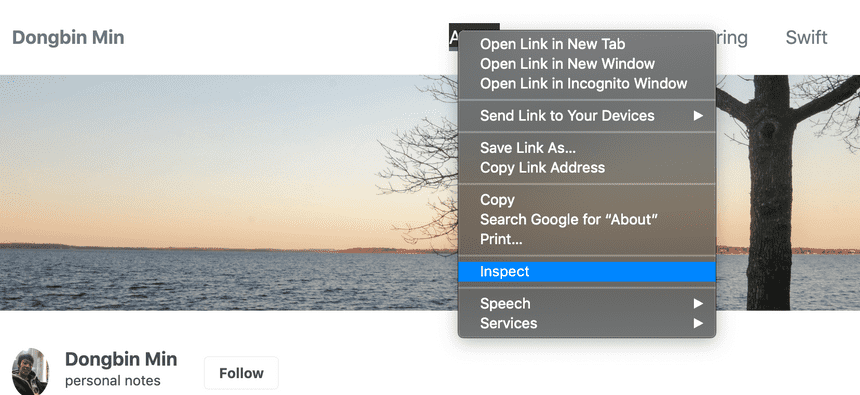

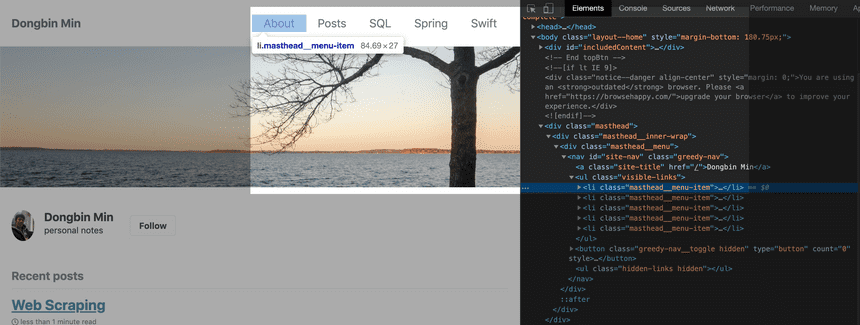

2. Inspect the elements on page

Right-click an element and choose Inspect, or open the developer tools by pressing F12. You'll notice that each navigation item is placed in an `li` tag with the class name `masthead__menu-item`.

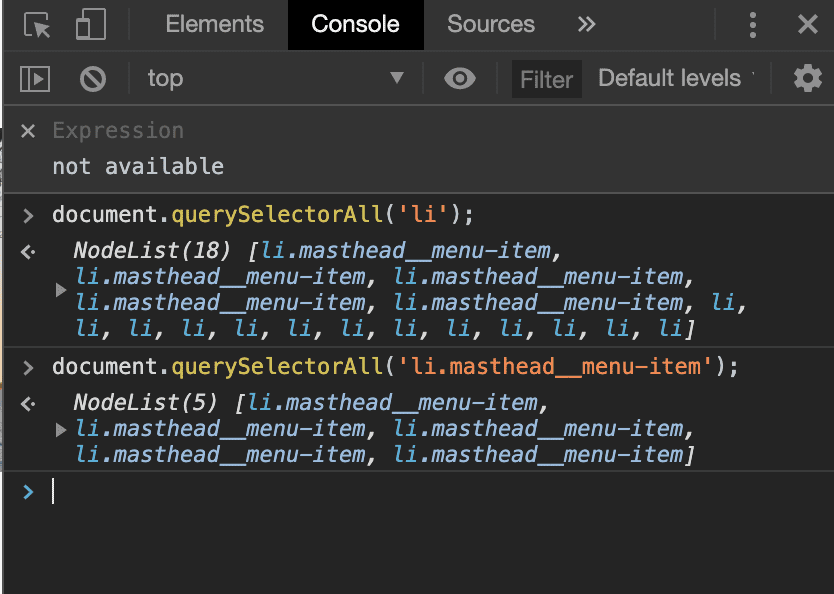

3. Verify & Select items

Verify

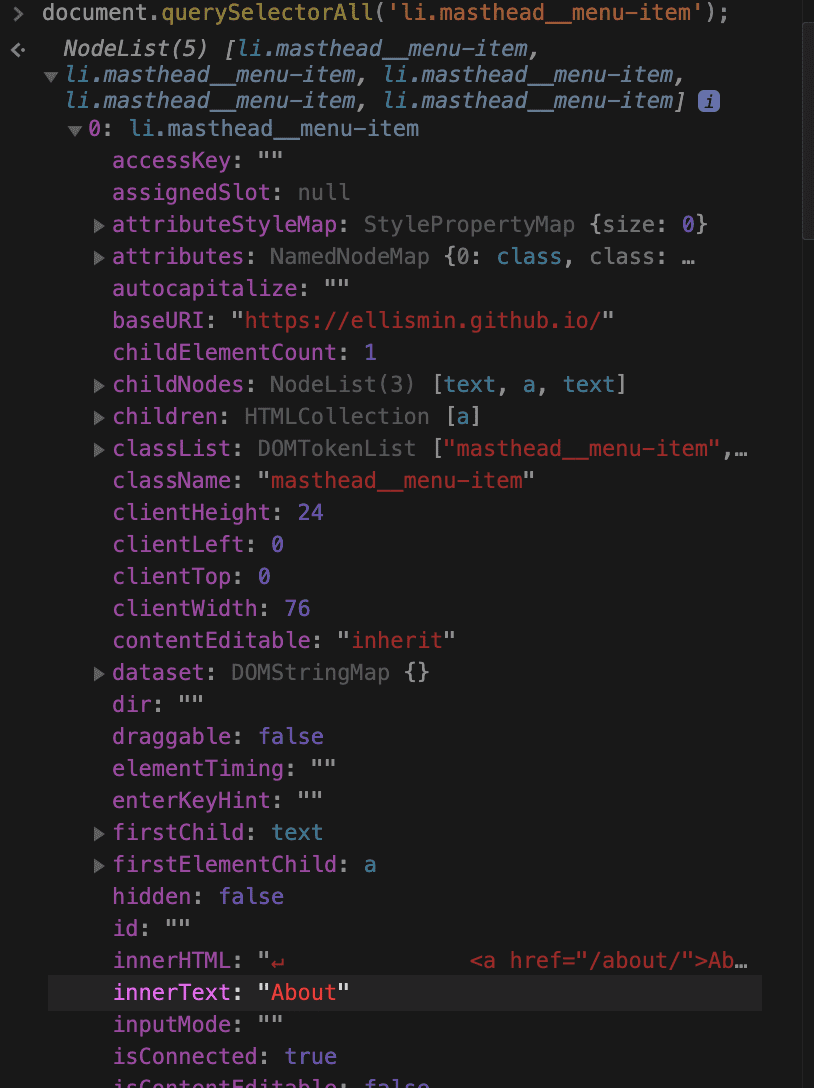

Move to the console and select the specified elements:

```javascript
document.querySelectorAll('li.masthead__menu-item');
```

This finds the matching elements on the current page. Here, it finds five `li` elements with the class name `masthead__menu-item`.

Expand the list to see their values. You'll notice that each `li.masthead__menu-item` element has the `innerText` value we're interested in.

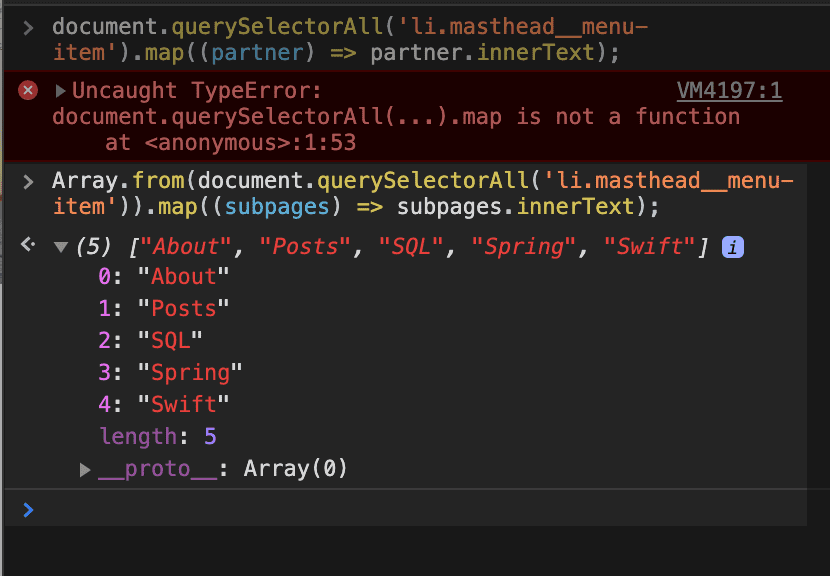

Select innerText

Get an array copy of the `innerText` values of the navbar items:

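```javascript
Array.from(document.querySelectorAll('li.masthead__menu-item')).map((subpages) => subpages.innerText);
```

Note that `querySelectorAll` returns a NodeList, which needs to be converted into an Array before calling `map`. Otherwise, it will throw a TypeError, because NodeList has no `map` method.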
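To see the conversion outside the browser, here's a minimal Node.js sketch. Since Node has no `document`, it assumes a hypothetical array-like object, `fakeNodeList` (numeric keys plus `length`), as a stand-in for a real NodeList. Calling `map` on it directly fails, while `Array.from` first copies it into a real Array.

```javascript
// Hypothetical stand-in for a NodeList: indexed entries plus a length,
// but none of the Array.prototype methods such as map.
const fakeNodeList = {
  0: { innerText: "About" },
  1: { innerText: "Posts" },
  2: { innerText: "SQL" },
  length: 3,
}

// Calling map directly throws a TypeError, because the object has no map method.
let directMapWorks = true
try {
  fakeNodeList.map(item => item.innerText)
} catch (err) {
  directMapWorks = !(err instanceof TypeError)
}

// Array.from copies the array-like object into a real Array, which has map.
const texts = Array.from(fakeNodeList).map(item => item.innerText)

console.log(directMapWorks) // false
console.log(texts) // [ 'About', 'Posts', 'SQL' ]
```

`Array.from` also accepts a mapping function as its second argument, so `Array.from(list, item => item.innerText)` does the same in one step.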

4. Use Node.js & Puppeteer for scraping

If you haven't already, install Node.js & Puppeteer (`npm i puppeteer`).

```javascript
const puppeteer = require("puppeteer")

;(async () => {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()
  await page.goto("https://ellismin.github.io")

  const navItems = await page.evaluate(() =>
    Array.from(document.querySelectorAll("li.masthead__menu-item")).map(
      subpages => subpages.innerText
    )
  )

  console.log(navItems)
  await browser.close()
})()
```

Put the query from the step above into a JS file, `scraper.js`. Then test it with:

```bash
$ node scraper.js
```

Output:

```
$ node scraper.js
[ 'About', 'Posts', 'SQL', 'Spring', 'Swift' ]
```

Writing the result array to a file

```javascript
const puppeteer = require("puppeteer")

;(async () => {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()
  await page.goto("https://ellismin.github.io")

  const navItems = await page.evaluate(() =>
    Array.from(document.querySelectorAll("li.masthead__menu-item")).map(
      subpages => subpages.innerText
    )
  )

  writeFile(navItems, "array.txt")
  await browser.close()
})()

// Write array into file, one element per line
function writeFile(arr, fileName) {
  // toString must be called; arr.toString without () is the function itself
  var strToBeWritten = arr.toString().replace(/,/g, "\r\n")
  var fs = require("fs")

  fs.writeFile(fileName, strToBeWritten, function(err) {
    if (err) {
      return console.log(err)
    }
    console.log("The file was saved!")
  })
}
```

The function above writes the array to a file.

```javascript
var strToBeWritten = arr.toString().replace(/,/g, "\r\n")
```

`arr.toString()` returns the array elements separated by commas. The regex `/,/g` matches every comma (the `g` flag makes the match global), and `replace` swaps each one for a line break (`\r\n`), so the file stores each array element on its own line.
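One caveat with the regex approach: if a scraped string itself contains a comma, it gets split across two lines. A minimal sketch of an alternative uses `join("\r\n")`, which inserts the separator only between elements. The helper `writeLines`, the sample item `"SQL, Spring"`, and the output path in the OS temp directory are all hypothetical, chosen only to show the difference.

```javascript
const fs = require("fs")
const os = require("os")
const path = require("path")

// Regex approach from above: every comma becomes a line break,
// including commas inside an element.
function viaReplace(arr) {
  return arr.toString().replace(/,/g, "\r\n")
}

// join puts the separator only between elements.
function viaJoin(arr) {
  return arr.join("\r\n")
}

// Hypothetical helper: write one array element per line (synchronously).
function writeLines(arr, fileName) {
  fs.writeFileSync(fileName, viaJoin(arr))
}

// Hypothetical sample: the last element contains a comma.
const items = ["About", "Posts", "SQL, Spring"]
console.log(viaReplace(items).split("\r\n")) // [ 'About', 'Posts', 'SQL', ' Spring' ]
console.log(viaJoin(items).split("\r\n")) // [ 'About', 'Posts', 'SQL, Spring' ]

const target = path.join(os.tmpdir(), "array.txt")
writeLines(items, target)
```

For navbar labels without commas both versions produce the same file; `join` simply stays correct when the data is less tidy.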

Output in array.txt:

```
$ cat array.txt
About
Posts
SQL
Spring
Swift
```

We've achieved our main goal here. Continue reading if you want to work further with the stored data.

Downloading images on the page as files (+request module)

```javascript
document.querySelectorAll("p>img");
```

Run in the console, the command above selects all images inside paragraphs on this post page. Now you can replace the `navItems` query with one that collects images.

The `>` sign (the child combinator) matches only `img` tags that are direct children of a `p` tag.

```javascript
const puppeteer = require("puppeteer")

;(async () => {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()
  await page.goto("https://ellismin.github.io/webscraping/")

  // Get elements
  const images = await page.evaluate(() =>
    Array.from(document.querySelectorAll("p>img")).map(imgs => imgs.src)
  )

  console.log(images)
  await browser.close()
})()
```

Output:

```
$ node scraper.js
[ 'https://ellismin.github.io/images/webscrape/1.png',
  'https://ellismin.github.io/images/webscrape/2.png',
  'https://ellismin.github.io/images/webscrape/3.png',
  'https://ellismin.github.io/images/webscrape/4.png',
  'https://ellismin.github.io/images/webscrape/5.png',
  'https://ellismin.github.io/images/webscrape/6.png' ]
```

We now have an array of image sources, which we can download.
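The `request` package used below has since been deprecated. As an alternative, here's a minimal sketch assuming Node.js 18 or later, where `fetch` is available globally, so no extra package is needed. The helper names `imageFileName`, `downloadOne`, and `downloadImages` are hypothetical. Unlike the hard-coded `.png`, the file name keeps the extension from the URL path, falling back to `.png` as the original code does.

```javascript
const fs = require("fs/promises")
const path = require("path")

// Hypothetical helper: prefix + 1-based index + extension from the URL path.
// Falls back to ".png" when the path has no extension.
function imageFileName(prefix, index, url) {
  const ext = path.extname(new URL(url).pathname) || ".png"
  return `${prefix}${index + 1}${ext}`
}

// Download one URL to disk with the global fetch (Node.js 18+).
async function downloadOne(url, fileName) {
  const res = await fetch(url)
  if (!res.ok) {
    throw new Error(`${url} responded with ${res.status}`)
  }
  await fs.writeFile(fileName, Buffer.from(await res.arrayBuffer()))
}

// Start all downloads in parallel and resolve once every file is written.
async function downloadImages(urls, prefix) {
  await Promise.all(
    urls.map((url, i) => downloadOne(url, imageFileName(prefix, i, url)))
  )
}
```

Inside the scraper's async function, `await downloadImages(images, "img")` would take the place of the `downloadImage` call. Because it is awaited, the script only finishes after every file has been written.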

Request module

Downloading images from the URLs we've extracted is much easier with the `request` package. To install it:

```bash
$ npm i request
```

```javascript
const puppeteer = require("puppeteer")

;(async () => {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()
  await page.goto("https://ellismin.github.io/webscraping/")

  const images = await page.evaluate(() =>
    Array.from(document.querySelectorAll("p>img")).map(imgs => imgs.src)
  )
  downloadImage(images, "imgPrefix")

  await browser.close()
})()

async function downloadImage(arr, imgPrefix) {
  var fs = require("fs"),
    request = require("request")

  var download = function(url, filename, callback) {
    request.head(url, function(err, res, body) {
      // Report failed requests instead of silently ignoring them
      if (err) {
        return console.log(err)
      }
      request(url)
        .pipe(fs.createWriteStream(filename))
        .on("close", callback)
    })
  }

  // download images with urls in arr
  for (var i = 0; i < arr.length; i++) {
    var imgName = imgPrefix + (i + 1).toString() + ".png"
    download(arr[i], imgName, function() {
      console.log("image created!")
    })
  }
}
```

Scraping recent posts

Let's now try scraping multiple fields per item and storing them in JSON format: the titles and excerpts of the recent posts on the main page.

```javascript
document.querySelectorAll("article")
document.querySelector("article h2.archive__item-title")
document.querySelector("article p.archive__item-excerpt")
```

- The first line selects all articles, each of which contains a title and an excerpt

- The second line selects a title within an article

- The third line selects an excerpt within an article

scraper.js

```javascript
const puppeteer = require("puppeteer")

;(async () => {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()
  await page.goto("https://ellismin.github.io")

  const posts = await page.evaluate(() =>
    Array.from(document.querySelectorAll("article")).map(post => ({
      title: post.querySelector("h2.archive__item-title").innerText,
      excerpt: post.querySelector("p.archive__item-excerpt").innerText,
    }))
  )

  console.log(posts)
  await browser.close()
})()
```

Output:

```
$ node scraper.js
[ { title: 'Web Scraping', excerpt: 'Extracting source elements with Node.js & Puppeteer' },
  { title: 'Aggregate Functions', excerpt: 'COUNT • GROUP BY' },
  { title: 'Web Scraping Prerequisites', excerpt: 'Use Puppeteer for web scraping' },
  { title: 'Table of Contents', excerpt: 'Dynamically generate table of contents with JavaScript' },
  { title: 'Refining Selections', excerpt: 'DISTINCT • ORDERBY • LIMIT • LIKE' } ]
```
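The script above only logs the posts. To actually store them as JSON, a minimal sketch could serialize the array with `JSON.stringify` (2-space indentation for readability) and write it with Node's built-in `fs`. The helper `savePostsAsJson` and the `posts.json` path in the OS temp directory are hypothetical, and the sample `posts` array copies two entries from the output above in place of a live scrape.

```javascript
const fs = require("fs")
const os = require("os")
const path = require("path")

// Sample data copied from the output above, standing in for the
// array returned by page.evaluate.
const posts = [
  { title: "Web Scraping", excerpt: "Extracting source elements with Node.js & Puppeteer" },
  { title: "Aggregate Functions", excerpt: "COUNT • GROUP BY" },
]

// Hypothetical helper: serialize with 2-space indentation and write to disk.
function savePostsAsJson(items, fileName) {
  fs.writeFileSync(fileName, JSON.stringify(items, null, 2))
}

const target = path.join(os.tmpdir(), "posts.json")
savePostsAsJson(posts, target)

// Reading the file back yields the same objects.
const loaded = JSON.parse(fs.readFileSync(target, "utf8"))
console.log(loaded.length) // 2
```

In the scraper itself, an article without an excerpt would make `post.querySelector(...)` return `null`, and reading `.innerText` from it would throw. Optional chaining (`?.innerText`) is one way to guard against that.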