[Scraping]: web scraping imgur.com

Node.js

scraping

10/01/2019

Intro

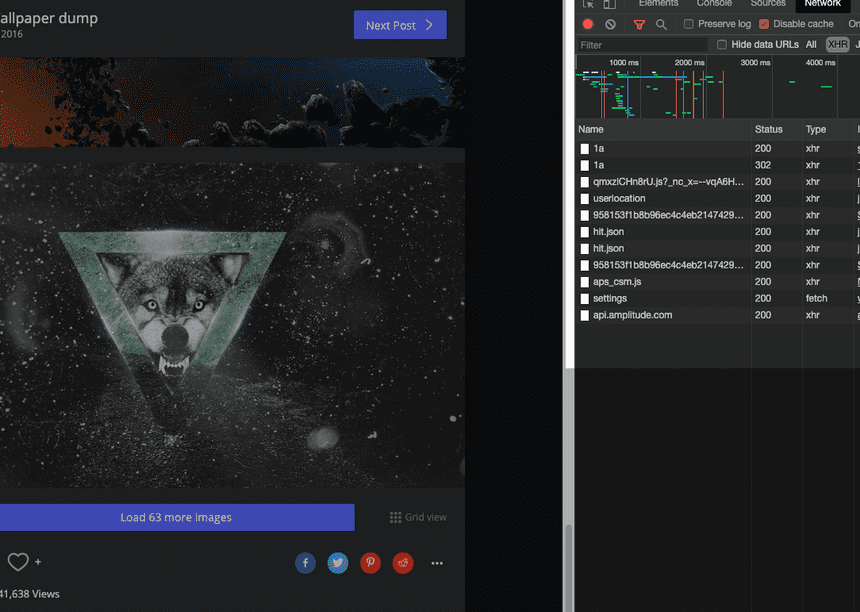

I wanted to scrape multiple wallpaper images from the imgur website.

Using the strategy from [Scraping]: Basics, I tried to query the src attribute of every image element on the page.

However, imgur.com loads its images through an API. It doesn't render all the images at once; instead, it loads the next page as you scroll, so querying the page only returns the images loaded so far.
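For reference, a src query of this kind can be sketched with Puppeteer's `page.$$eval`, which runs a callback in the browser over every element matching a selector. This is a minimal sketch, assuming you already have a Puppeteer `page` that has navigated to the gallery; the helper name `getImageSrcs` is hypothetical. Because it only sees the `<img>` elements that exist in the DOM when it runs, it returns just the first batch on a lazily loading page.

```javascript
// Hypothetical helper: collect the src of every <img> currently in the DOM.
// `page` is assumed to be a Puppeteer Page that has already navigated somewhere.
async function getImageSrcs(page) {
  // page.$$eval passes all elements matching "img" to the callback,
  // which runs inside the browser and returns a serializable array.
  return page.$$eval("img", imgs => imgs.map(img => img.src))
}
```

Usage: call `await getImageSrcs(page)` after `await page.goto(...)`; images that imgur loads later on scroll will not be in the result.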

Inspect

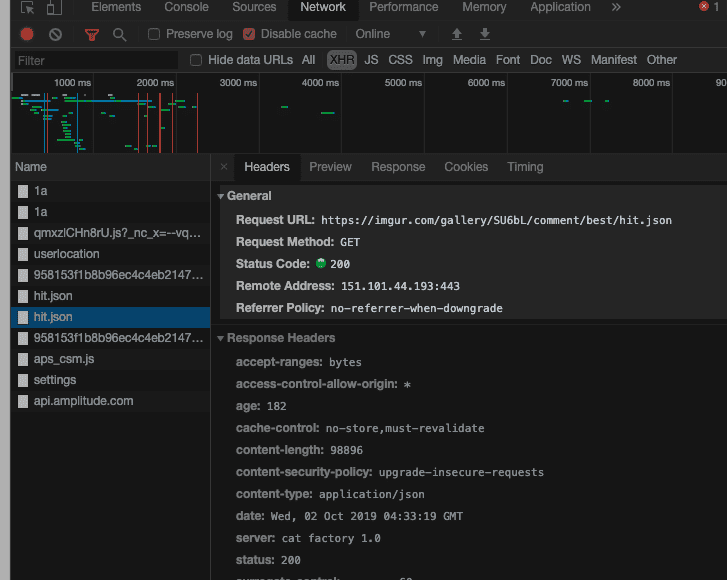

Open Inspect Element in Google Chrome, then move to the Network tab and select the XHR filter.

- The XHR filter shows only XMLHttpRequest requests, which are sent from JavaScript; the page sends one of these whenever it loads more images.
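The same XHR traffic can also be watched from a script instead of DevTools. A minimal sketch, assuming a Puppeteer `page`: Puppeteer emits a `response` event for every network response, and `response.request().resourceType()` reports `"xhr"` or `"fetch"` for script-initiated requests. The helper `watchJsonResponses` is hypothetical, and filtering on the `content-type` header is an assumption about how the JSON endpoints are served.

```javascript
// Hypothetical helper: record the URL of every XHR/fetch response that
// declares a JSON content type. Returns an array that fills up as the page loads.
function watchJsonResponses(page) {
  const jsonUrls = []
  page.on("response", response => {
    const type = response.request().resourceType()
    // Puppeteer exposes response header names in lower case.
    const contentType = response.headers()["content-type"] || ""
    if ((type === "xhr" || type === "fetch") && contentType.includes("application/json")) {
      jsonUrls.push(response.url())
    }
  })
  return jsonUrls
}
```

Register it before `page.goto(...)` so that responses from the first load are captured too, then scroll and inspect the array.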

Hover over the names of the requests and take a look at the JSON responses.

This reveals the URL where the names of all the images are stored. Let's open that link to retrieve the JSON data:

https://imgur.com/gallery/SU6bL/comment/best/hit.json

Getting data from JSON file

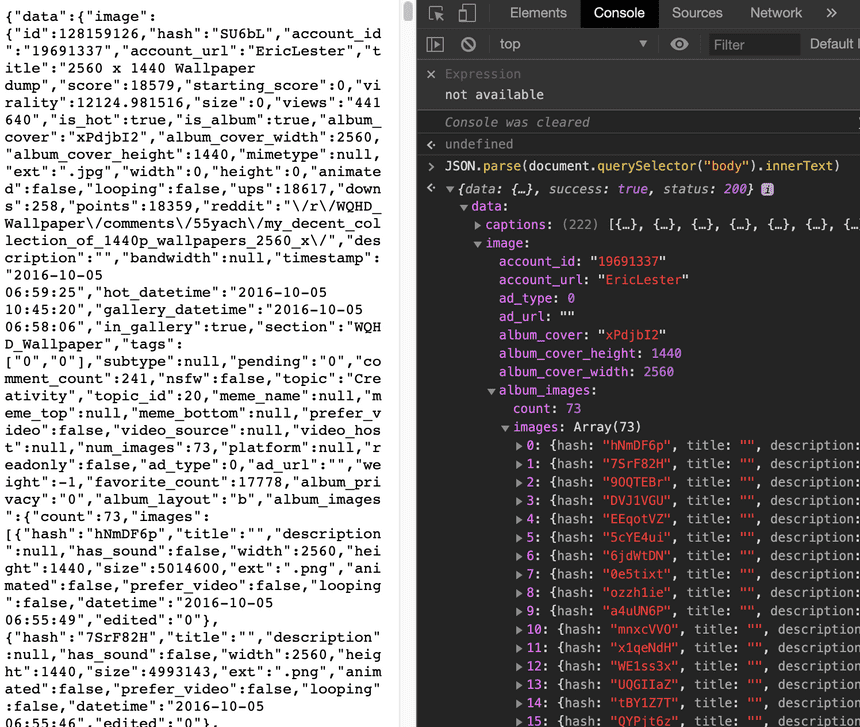

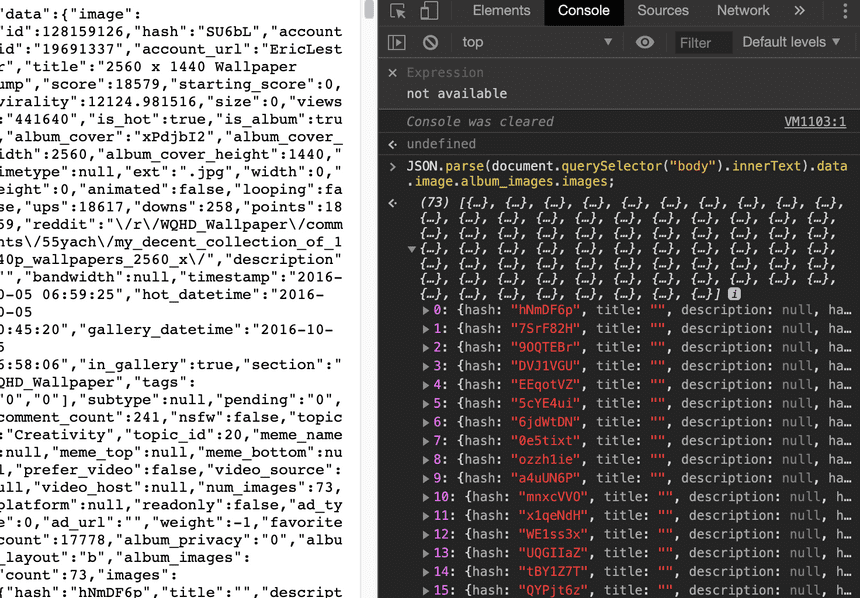

Open the console and type the following to parse the JSON file:

```javascript
JSON.parse(document.querySelector("body").innerText)
```

Looking at the parsed data, you will see that the images are stored under data -> image -> album_images -> images.

Type the following to get these images:

```javascript
JSON.parse(document.querySelector("body").innerText).data.image.album_images.images
```

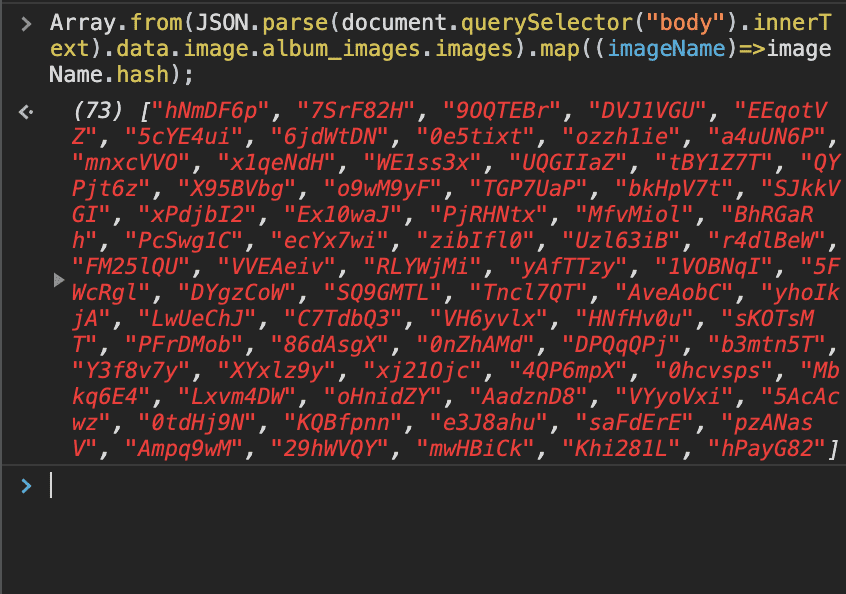

Then map it into an array of image hashes:

```javascript
Array.from(
  JSON.parse(document.querySelector("body").innerText).data.image.album_images.images
).map(imageName => imageName.hash)
```

Now we have extracted the image names. Let's use Puppeteer to download these images.
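Before moving to Puppeteer, note that the console one-liner can also be written as a plain Node.js function that takes an already-parsed payload, which makes it easy to test without a browser. A minimal sketch: `extractHashes` is a hypothetical name, and returning an empty array when any level of data.image.album_images.images is missing is an assumption, not documented imgur behavior.

```javascript
// Hypothetical helper: walk data -> image -> album_images -> images and
// return each image's hash. Returns [] if any level of the path is missing.
function extractHashes(payload) {
  const images = payload?.data?.image?.album_images?.images
  if (!Array.isArray(images)) return []
  return images.map(image => image.hash)
}
```

Usage: `extractHashes(JSON.parse(jsonText))`, where `jsonText` is the body of the hit.json response.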

On Puppeteer

Test the array

```javascript
const puppeteer = require("puppeteer")

async function scrapeJSON() {
  // Open the browser
  const browser = await puppeteer.launch({
    headless: false,
  })

  const page = await browser.newPage()

  await page.goto("https://imgur.com/gallery/SU6bL/comment/best/hit.json")

  // Parse the JSON shown in the page body and keep each image's hash
  const imageNames = await page.evaluate(() => {
    return Array.from(
      JSON.parse(document.querySelector("body").innerText).data.image
        .album_images.images
    ).map(imageName => imageName.hash)
  })
  console.log(imageNames)

  await browser.close()
}

scrapeJSON()
```

```
$ node imgurScrape.js
[ 'hNmDF6p', '7SrF82H', ....... 'hPayG82' ]
```

We obtained the image names. Let's build the image URLs on top of the downloadImage() function from the last post.
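As a side note, since the hit.json URL returns raw JSON, the body can likely be read straight from the navigation response instead of parsing the page's rendered text. A sketch, assuming Puppeteer's `HTTPResponse.json()` and `HTTPResponse.ok()` (the object `page.goto` resolves to) and the same data.image.album_images.images path; `fetchImageHashes` is a hypothetical name.

```javascript
// Hypothetical helper: navigate to a JSON URL and read the body through the
// navigation response instead of document.body.innerText.
async function fetchImageHashes(page, url) {
  const response = await page.goto(url)
  // page.goto can resolve to null (e.g. for same-document navigations).
  if (!response || !response.ok()) {
    throw new Error(`Request for ${url} failed`)
  }
  const payload = await response.json()
  return payload.data.image.album_images.images.map(image => image.hash)
}
```

Inside scrapeJSON(), this would replace the `page.goto` plus `page.evaluate` pair with a single `await fetchImageHashes(page, url)` call.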

Be sure to have the request module available (npm install request).

```javascript
var urlPrefix = "https://i.imgur.com/"
var urlAffix = ".png"

// Create URLs from arr
for (let i = 0; i < arr.length; i++) {
  arr[i] = urlPrefix + arr[i] + urlAffix
}
```
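The request package has since been deprecated. A minimal sketch of the URL-building and download steps using only Node's built-in `https` and `fs` modules, assuming the hashes extracted above and the same `.png` suffix; `buildImageUrls` and `downloadFile` are hypothetical names, and this sketch does not follow HTTP redirects.

```javascript
const fs = require("fs")
const https = require("https")

// Hypothetical helper: turn image hashes into i.imgur.com URLs without
// mutating the input array (the loop above overwrites arr in place).
function buildImageUrls(hashes, suffix = ".png") {
  return hashes.map(hash => "https://i.imgur.com/" + hash + suffix)
}

// Hypothetical helper: stream one URL to a local file. Resolves when the
// write stream finishes; rejects on a non-200 status or a network/file error.
// Redirects are not followed in this sketch.
function downloadFile(url, filename) {
  return new Promise((resolve, reject) => {
    https
      .get(url, res => {
        if (res.statusCode !== 200) {
          res.resume() // discard the body so the socket is released
          reject(new Error(`GET ${url} returned ${res.statusCode}`))
          return
        }
        const file = fs.createWriteStream(filename)
        res.pipe(file)
        file.on("finish", resolve)
        file.on("error", reject)
      })
      .on("error", reject)
  })
}
```

Because downloadFile returns a promise, the downloads can be awaited one at a time in a for...of loop, or all together with Promise.all.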

Whole code snippet:

```javascript
const puppeteer = require("puppeteer")
const fs = require("fs")
const request = require("request")

async function downloadImage(arr, imgPrefix) {
  // Download one URL to a local file, then call callback(err)
  var download = function(url, filename, callback) {
    request.head(url, function(err) {
      if (err) return callback(err)
      request(url)
        .pipe(fs.createWriteStream(filename))
        .on("close", () => callback(null))
    })
  }

  var urlPrefix = "https://i.imgur.com/"
  var urlAffix = ".png"

  // Create URLs from arr (overwrites each hash with its full URL)
  for (let i = 0; i < arr.length; i++) {
    arr[i] = urlPrefix + arr[i] + urlAffix
  }

  // Test downloading one image
  // download(arr[0], "test.png", function() {
  //   console.log("image created!!")
  // })

  // Download every image as <imgPrefix>1.png, <imgPrefix>2.png, ...
  for (let i = 0; i < arr.length; i++) {
    const imgName = imgPrefix + (i + 1).toString() + ".png"
    download(arr[i], imgName, function(err) {
      if (err) console.error(err)
      else console.log("image created!")
    })
  }
}

async function scrapeJSON() {
  // Open the browser
  const browser = await puppeteer.launch({
    headless: false,
  })

  const page = await browser.newPage()

  await page.goto("https://imgur.com/gallery/SU6bL/comment/best/hit.json")

  // Parse the JSON in the page body and keep each image's hash
  const imageNames = await page.evaluate(() => {
    return Array.from(
      JSON.parse(document.querySelector("body").innerText).data.image
        .album_images.images
    ).map(imageName => imageName.hash)
  })
  // console.log(imageNames)

  // Start the downloads; they use request, so they keep running after the browser closes
  downloadImage(imageNames, "wallpaper-")
  await browser.close()
}

// Run
scrapeJSON()
```

Result